Overview

Is backpropagation the ultimate tool on the path to achieving artificial intelligence as its success and widespread adoption would suggest?

Many have questioned the biological plausibility of backpropagation as a learning mechanism since its discovery. The weight transport and timing problems are the most disputable. The same properties of backpropagation training also have practical consequences. For instance, backpropagation training is a global and coupled procedure that limits the amount of possible parallelism and yields high latency.

These limitations have motivated us to discuss possible alternative directions. In this workshop, we want to promote such discussions by bringing together researchers from various but related disciplines, and to discuss possible solutions from engineering, machine learning and neuroscientific perspectives.

Schedule

Throughout the day we will livestream invited talks, contributed talks, and live Q&A session. We will also have two virtual poster sessions in [Gather.Town]. Note, that our Gather.Town is open throughout the entire workshop, so feel free to hangout and talk to other participants!

All times listed are in PST.

If you have questions for our speakers or a topic to discuss, please post them [here].

| 6:00 - 6:15 | Opening Remarks | |

| 6:15 - 6:45 | Bastiaan Veeling - Bio-Constrained Intelligence and The Great Filter | [Video] |

| 6:45 - 7:15 | Olivier Teytaud - Gradient-Free Learning (with Nevergrad) | [Video] |

| 7:15 - 8:30 | First Poster Session | [Gather] |

| 8:30 - 9:00 | Karl Friston - Active Inference and Artificial Curiosity | [Video] |

| 9:00 - 9:45 | Live Panel Discussion with Bastiaan Veeling, Olivier Teytaud and Karl Friston | |

| 9:45 - 11:00 | Break | |

| 11:00 - 11:32 | Yoshua Bengio - Towards bridging the Gap between Backprop and Neuroscience | [Video] |

| 11:32 - 12:08 | Danielle Bassett - A Story from the Human World | [Video] |

| 12:09 - 12:40 | Contributed Talks (1) | |

| 12:10 - 12:22 | Randomized Automatic Differentiation - Deniz Oktay, Nick B McGreivy, Alex Beatson, Ryan Adams | [Video] |

| 12:22 - 12:35 | ZORB: A Derivative-Free Backpropagation Algorithm for Neural Networks - Varun Ranganathan, Alex Lewandowski | [Video] |

| 12:35 - 12:40 | Live Q&A Contributed Talks (1) | |

| 12:40 - 12:45 | Break | |

| 12:45 - 13:15 | Contributed Talks (2) | |

| 12:46 - 12:59 | Policy Manifold Search for Improving Diversity-based Neuroevolution - Nemanja Rakicevic, Antoine Cully, Petar Kormushev | [Video] |

| 12:59 - 13:12 | Hardware Beyond Backpropagation: a Photonic Co-Processor for Direct Feedback Alignment - Julien Launay, Iacopo Poli, Kilian Muller, Igor Carron, Laurent Daudet, Florent Krzakala, Sylvain Gigan | [Video] |

| 13:12 - 13:15 | Live Q&A Contributed Talks (2) | |

| 13:15 - 13:45 | David Duvenaud - Hypernets and the Inverse Function Theorem | [Video] |

| 13:45 - 14:15 | Cristina Savin - New (Old) Tricks for Online Metalearning | [Video] |

| 14:15 - 15:00 | Live Panel Discussion with Yoshua Bengio, Danielle Bassett, David Duvenaud and Cristina Savin | |

| 15:00 - 16:30 | Second Poster Session | [Gather] |

Speakers

Organizers

Yanping Huang

GoogleResearch: scalable learning, reinforcement learning, computational neuroscience.

Viorica Pătrăucean

DeepmindResearch: computer vision, scalable learning, biologically plausible learning.

Grzegorz Świrszcz

DeepmindResearch: machine learning, dynamical systems, mathematical modeling.

Accepted Papers

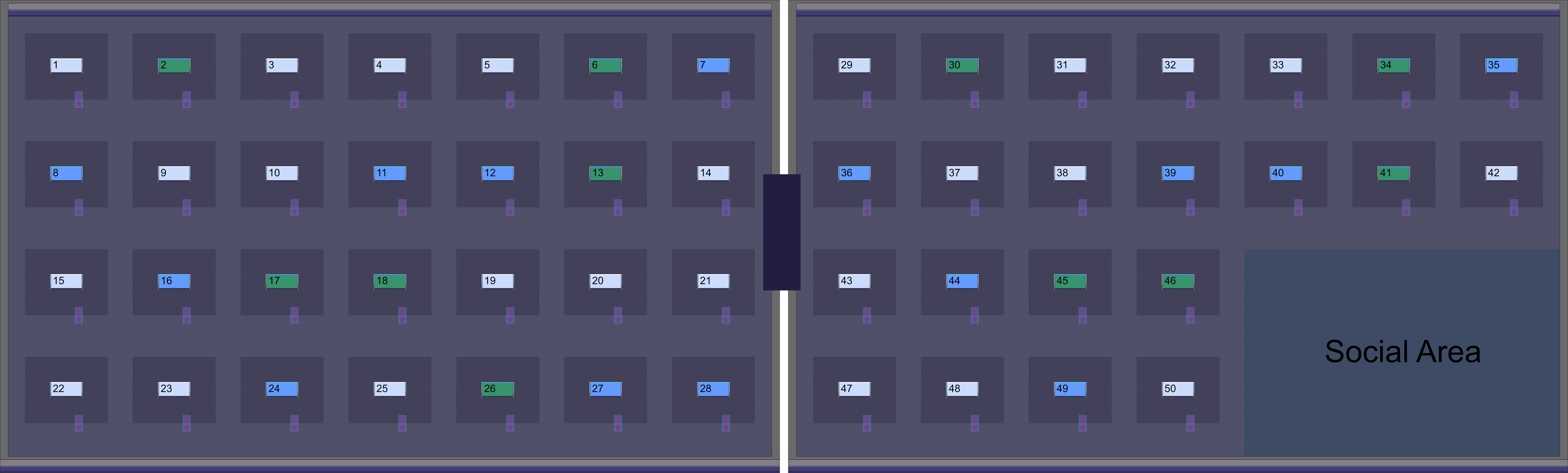

We will have two poster sessions in [Gather.Town]. The numbers correspond to the papers' locations during these poster sessions. See map below.Papers accepted for oral presentations

| 19. | Hardware Beyond Backpropagation: a Photonic Co-Processor for Direct Feedback Alignment - Julien Launay, Iacopo Poli, Kilian Müller, Igor Carron, Laurent Daudet, Florent Krzakala, Sylvain Gigan | [Video] |

| 36. | Policy Manifold Search for Improving Diversity-based Neuroevolution - Nemanja Rakicevic, Antoine Cully, Petar Kormushev | [Video] |

| 39. | Randomized Automatic Differentiation - Deniz Oktay, Nick B McGreivy, Joshua Aduol, Alex Beatson, Ryan P Adams | [Video] |

| ZORB: A Derivative-Free Backpropagation Algorithm for Neural Networks - Varun Ranganathan, Alex Lewandowski | [Video] |

Papers accepted for poster presentations

| 1. | A biologically plausible neural network for local supervision in cortical microcircuits - Siavash Golkar, David Lipshutz, Yanis Bahroun, Anirvan Sengupta, Dmitri Chklovskii | |

| 2. | A More Biologically Plausible Local Learning Rule for ANNs - Shashi Kant Gupta | |

| 3. | A Theoretical Framework for Target Propagation - Alexander Meulemans, Francesco S Carzaniga, Johan Suykens, João Sacramento, Benjamin F. Grewe | |

| 4. | Align, then Select: Analysing the Learning Dynamics of Feedback Alignment - Maria Refinetti, Stéphane d'Ascoli, Ruben Ohana, Sebastian Goldt | |

| 5. | Architecture Agnostic Neural Networks - Sabera Talukder, Guruprasad Raghavan, Yisong Yue | |

| 6. | Backpropagation Free Transformers - Dinko D Franceschi | |

| 7. | Biophysical Neural Networks Provide Robustness and Versatility over Artificial Neural Networks - James Hazelden, Michael I Ivanitskiy, Daniel Forger | |

| 8. | BP2T2: Moving towards Biologically-Plausible BackPropagation Through Time - Arna Ghosh, Jonathan Cornford, Blake Richards | |

| 9. | Convolutional Neural Networks from Image Markers - Barbara C Benato, Italos Estilon de Souza, Felipe L Galvao, Alexandre X Falcão | [Video] |

| 10. | Deep Networks from the Principle of Rate Reduction - Kwan Ho Ryan Chan, Yaodong Yu, Chong You, Haozhi Qi, John Wright, Yi Ma | |

| 11. | Deep Neural Network Training without Multiplications - Tsuguo Mogami | |

| 12. | Deep Neural Networks Are Congestion Games - Nina Vesseron, Ievgen Redko, Charlotte Laclau | |

| 13. | Deep Reservoir Networks with Learned Hidden Reservoir Weights using Direct Feedback Alignment - Matthew S Evanusa, Aloimonos Yiannis, Cornelia Fermuller | [Video] |

| 14. | Direct Feedback Alignment Scales to Modern Deep Learning Tasks and Architectures - Julien Launay, François Boniface, Iacopo Poli, Florent Krzakala | |

| 15. | Feature Whitening via Gradient Transformation for Improved Convergence - Shmulik Markovich-Golan, Barak Battash, Amit Bleiweiss | |

| 16. | Front Contribution instead of Back Propagation - Swaroop Ranjan Mishra, Anjana Arunkumar | [Video] |

| 17. | Gated Linear Networks and Extensions - Eren Sezener, David Budden, Marcus Hutter, Christopher Mattern, Jianan Wang, Joel Veness | |

| 18. | Generalized Stochastic Backpropagation - Amine Echraibi, Joachim Flocon-Cholet, Stéphane W Gosselin, Sandrine Vaton | |

| 20. | HebbNet: A Simplified Hebbian Learning Framework to do Biologically Plausible Learning - Manas Gupta, ArulMurugan Ambikapathi, Ramasamy Savitha | [Video] |

| 21. | Hindsight Network Credit Assignment - Kenny Young | [Video] |

| 22. | How and When does Feedback Alignment Work? - Stéphane d'Ascoli, Maria Refinetti, Ruben Ohana, Sebastian Goldt | |

| 23. | Ignorance is Bliss: Adversarial Robustness by Design through Analog Computing and Synaptic Asymmetry - Alessandro Cappelli, Ruben Ohana, Julien Launay, Iacopo Poli, Florent Krzakala | |

| 24. | Improving Multimodal Accuracy Through Modality Pre-training and Attention - Aya Abdelsalam A Ismail, Faisal Ishtiaq, Mahmudul Hasan | |

| 25. | Investigating Coagent Networks for Supervised Learning - Dhawal Gupta, Matthew Schlegel, James Kostas, Gabor Mihucz, Martha White | |

| 26. | Investigating the Scalability and Biological Plausibility of the Activation Relaxation Algorithm - Beren Millidge, Alexander D Tschanz, Anil Seth, Christopher Buckley | |

| 27. | Layer-wise Learning of Kernel Dependence Networks - Chieh Tzu Wu, Aria Masoomi, Arthur Gretton, Jennifer Dy | [Video] |

| 28. | Layer-wise Learning via Kernel Embedding - Aria Masoomi, Chieh Tzu Wu, Arthur Gretton, Jennifer Dy | [Video] |

| 29. | Learning Flows By Parts - Manush Bhatt, David I Inouye | [Video] |

| 30. | MEAL V2: Boosting Vanilla ResNet-50 to 80%+ Top-1 Accuracy on ImageNet without Tricks - Zhiqiang Shen, Marios Savvides | |

| 31. | Menger: Large-Scale Distributed Reinforcement Learning - Amir Yazdanbakhsh, Junchao Chen, Yu Zheng | |

| 32. | Meta-Learning Backpropagation And Improving It - Louis Kirsch, Jürgen Schmidhuber | [Video] |

| 33. | MPLP: Learning a Message Passing Learning Protocol - Ettore Randazzo, Eyvind Niklasson, Alexander Mordvintsev | [Video] |

| 34. | Neighbourhood Distillation: On the benefits of non end-to-end distillation - Laëtitia M Shao, Max Moroz, Elad Eban, Yair Movshovitz-Attias | |

| 35. | Optimizing Neural Networks via Koopman Operator Theory - William T Redman, Akshunna S. Dogra | |

| 37. | Predicting Pretrained Weights of Large-scale CNNs - Boris Knyazev, Michal Drozdzal, Graham Taylor, Adriana Romero | [Video] |

| 38. | PyTorch-Hebbian: facilitating local learning in a deep learning framework - Jules Talloen | |

| 40. | Scaling Equilibrium Propagation to Deep ConvNets by Drastically Reducing its Gradient Estimator Bias - Axel Laborieux, Maxence M ERNOULT, Benjamin Scellier, Yoshua Bengio, Julie Grollier, Damien Querlioz | [Video] |

| 41. | Scaling up learning with GAIT-prop - Sander Dalm | |

| 42. | Self Normalizing Flows - Thomas A Keller, Jorn W.T. Peters, Priyank Jaini, Emiel Hoogeboom, Patrick Forré, Max Welling | [Video] |

| 43. | Short-Term Memory Optimization in Recurrent Neural Networks by Autoencoder-based Initialization - Antonio Carta, Alessandro Sperduti, Davide Bacciu | [Video] |

| 44. | Slot Machines: Discovering Winning Combinations of Random Weights in Neural Networks - Maxwell M Aladago, Lorenzo Torresani | |

| 45. | Supervised Learning with Brain Assemblies - Akshay Rangamani, Anshula Gandhi | [Video] |

| 46. | Symbiotic Learning of Dual Discrimination andReconstruction Networks - Tahereh Toosi, Elias B Issa | |

| 47. | The Interplay of Search and Gradient Descent in Semi-stationary Learning Problems - Shibhansh Dohare, Rupam Mahmood, Richard S Sutton | [Video] |

| 48. | Towards self-certified learning: Probabilistic neural networks trained by PAC-Bayes with Backprop - Maria Perez-Ortiz, Omar Rivasplata, John Shawe-Taylor, Csaba Szepesvari | |

| 49. | Towards truly local gradients with CLAPP: Contrastive, Local And Predictive Plasticity - Bernd Illing, Guillaume Bellec, Wulfram Gerstner | [Video] |

| 50. | Unintended Effects on Adaptive Learning Rate for Training Neural Network with Output Scale Change - Ryuichi Kanoh, Mahito Sugiyama | [Video] |

Accepted papers will be presented during the poster sessions in [Gather.Town]. This is the map:

Reviewers

We would like to thank all of our reviewers for their great work: Andrea Zugarini, Apoorva Nandini Saridena, Devansh Bisla, Enrico Meloni, Francesco Giannini, Gabriele Ciravegna, Giovanna Dimitri, Giuseppe D'Inverno, Giuseppe Marra, Haoran Zhu, Jing Wang, Joao Carreira, Lapo Faggi, Laura Sanabria-Rosas, Lisa Graziani, Maryam Majzoubi, Shihong Fang, Takieddine Mekhalfa, Yunfei Teng